Why AI is changing the physical design of data centres

By Darren Watkins, chief revenue officer at VIRTUS Data Centres

Artificial intelligence (AI) is no longer confined to research labs or pilot projects. It’s now a standard part of business operations, from fraud detection and healthcare diagnostics to real-time translation and customer service. And, as AI workloads expand, organisations are running into a practical challenge that cannot be solved with better algorithms alone: the physical infrastructure needs a fundamental upgrade.

Most enterprise IT was built around predictable workloads. Office software, ERP systems and corporate websites all consumed relatively stable amounts of power and cooling. Even when activity spiked, facilities could handle the load. AI changes that equation completely. Training a large model can involve thousands of graphics processing units (GPUs) working continuously for weeks, with each rack drawing many times more power than a conventional server rack. Inference workloads, where models are deployed into production, add relentless pressure of their own by running 24/7.

This is why so many AI projects stall once they move beyond the prototype stage. Companies may have the data, the people and the algorithms, but if the underlying environment cannot sustain the workload, progress grinds to a halt. Retrofitting traditional data halls is often uneconomic and complex. Instead, purpose-built facilities are emerging as the foundation for AI at scale.

Density, cooling and power

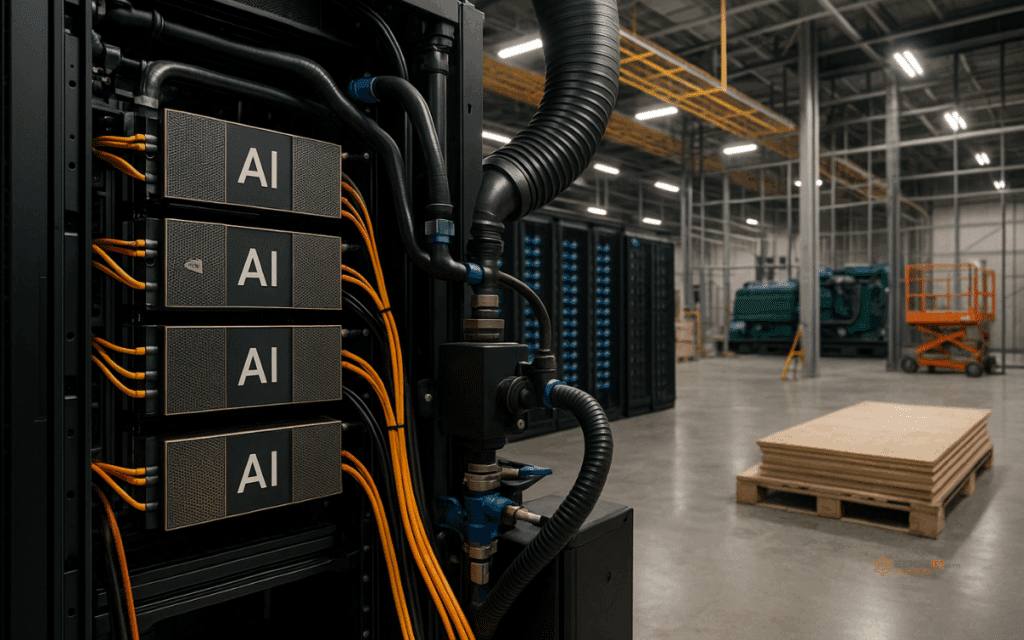

The most obvious difference between AI-ready sites and legacy environments is density. Traditional enterprise racks consume 2 – 4 kilowatts of power. Modern AI racks can require 50 – 80 kilowatts or more. That changes everything about the way a hall is designed, from electrical systems to airflow.
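To make the density gap concrete, here is a minimal sketch of the arithmetic using the rack figures quoted above. The 2 MW hall budget and the function name `racks_supported` are assumptions chosen for illustration, and the calculation ignores cooling and redundancy overhead.

```python
def racks_supported(hall_power_kw: float, rack_power_kw: float) -> int:
    """Whole racks that a fixed IT power budget can feed.

    Simplification: ignores cooling, redundancy and distribution losses.
    """
    if rack_power_kw <= 0:
        raise ValueError("rack_power_kw must be positive")
    return int(hall_power_kw // rack_power_kw)


# Hypothetical hall with 2 MW of IT power (illustrative figure, not from the article).
HALL_POWER_KW = 2000

legacy_racks = racks_supported(HALL_POWER_KW, 4)   # top of the 2-4 kW enterprise range
ai_racks = racks_supported(HALL_POWER_KW, 80)      # top of the 50-80 kW AI range

print(f"Legacy racks at 4 kW: {legacy_racks}")
print(f"AI racks at 80 kW:    {ai_racks}")
```

Under these assumptions the same power budget feeds 500 legacy racks but only 25 AI racks, which is why the electrical and airflow design of the hall has to be rethought rather than simply scaled.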

Cooling is the second critical factor. Air cooling, once sufficient for business IT, is pushed to its limits by AI hardware. Direct-to-chip liquid cooling and immersion systems are becoming essential, and these need to be designed into the facility from the beginning. Retrofitting pipework, pumps and containment into an existing building is possible but disruptive and costly.
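A rough sense of what liquid cooling has to handle comes from the standard heat-transfer relation: flow = power / (specific heat × temperature rise). The sketch below is a back-of-envelope estimate only. It assumes all rack power ends up as heat in a water loop, and the 10 K temperature rise and the name `coolant_flow_kg_per_s` are illustrative assumptions, not figures from any specific facility.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water


def coolant_flow_kg_per_s(rack_power_kw: float, delta_t_kelvin: float) -> float:
    """Water mass flow needed to carry away rack_power_kw of heat.

    Assumes every watt drawn by the rack is removed by the water loop,
    with the water warming by delta_t_kelvin as it passes through.
    """
    if delta_t_kelvin <= 0:
        raise ValueError("delta_t_kelvin must be positive")
    heat_watts = rack_power_kw * 1000.0
    return heat_watts / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_kelvin)


# Illustrative case: an 80 kW rack with an assumed 10 K coolant temperature rise.
flow = coolant_flow_kg_per_s(80, 10)
print(f"Required flow: {flow:.2f} kg/s")
```

With these assumed values the answer is roughly 1.9 kg of water per second for a single rack, which hints at why pipework and pumps need to be part of the design from the outset.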

Power distribution also has to evolve. Delivering high-density loads reliably means redundant distribution paths, intelligent uninterruptible power systems (UPS) and the ability to allocate power at rack level. Without this kind of design, facilities risk bottlenecks that undermine performance.

Why location matters

Compute capacity is only part of the picture. Performance also depends on where data sits. AI models are trained and deployed on information that can be spread across cloud platforms, enterprise systems and edge devices. If compute is located too far from the data source, latency rises, and both accuracy and customer experience can decline.
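The link between distance and latency can be estimated with simple arithmetic. The sketch below assumes light travels through optical fibre at roughly 200,000 km per second (about two thirds of its speed in a vacuum) and counts propagation delay only. Real networks add routing, queuing and processing time, so treat the result as a lower bound. The function name `round_trip_ms` and the example distances are hypothetical.

```python
FIBRE_KM_PER_MS = 200.0  # approximation: ~200,000 km/s for light in optical fibre


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fibre, in milliseconds.

    Counts propagation only; routing and processing delays are excluded.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return 2 * distance_km / FIBRE_KM_PER_MS


# Illustrative distances between compute and data source (assumed values).
for km in (50, 1000, 5000):
    print(f"{km:>5} km -> at least {round_trip_ms(km):.1f} ms round trip")
```

On this estimate, compute sited 1,000 km from its data pays at least 10 ms per round trip before any processing happens, and that cost is incurred on every exchange.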

This matters for latency-sensitive applications. In finance, a few milliseconds can mean millions gained or lost. In healthcare, diagnostic models need to return results instantly to be clinically useful. In consumer markets, conversational interfaces or personalised recommendations are judged as much on speed as on quality.

As a result, data centre location is becoming a strategic choice. Proximity to major datasets and user bases is increasingly seen as an optimisation layer in the AI value chain, not just a matter of cost.

Flexibility for evolving workloads

AI roadmaps do not stand still. Models are retrained, datasets expand and regulatory requirements change. Infrastructure needs to reflect this dynamism. Facilities built for fixed workloads risk becoming obsolete within a few years.

Modern designs therefore emphasise flexibility. This includes the ability to scale racks from 20 kW to 100 kW or more without complete redesign, modular capacity that can be added without downtime, and workload portability across sites. In practice, flexibility enables facilities to remain useful as AI continues to evolve at pace.

The sustainability challenge

The energy intensity of AI is attracting growing attention. Estimates suggest that training a single advanced model can consume as much electricity as hundreds of homes over a year. With regulators and investors sharpening their focus on sustainability, this is no longer a side issue.

New facilities are addressing the challenge by connecting directly to renewable energy sources, reusing waste heat for district heating and applying AI itself to optimise cooling efficiency. Transparent reporting against ESG standards is also becoming the norm. Building with sustainability in mind is increasingly a licence to operate rather than an optional add-on.

From aspiration to physical reality

The lesson for enterprises is clear. AI strategies cannot succeed without the right physical foundations. Algorithms, talent and data may provide the vision, but only infrastructure designed for density, proximity, flexibility and sustainability can turn that vision into reality.

As AI becomes embedded across industries, the design of the data centre is no longer a technical afterthought. It has become a central enabler of competitiveness.

About the author

Darren began his career as a graduate Military Officer in the RAF before moving into the commercial sector. He brings over 20 years of experience in telecommunications and managed services, gained at BT, MFS Worldcom, Level3 Communications, Attenda and COLT. He joined VIRTUS Data Centres from euNetworks, where he led market-changing deals with a number of large financial institutions and media agencies.

Additionally, he sits on the board of one of the industry’s most innovative Mobile Media Advertising companies, Odyssey Mobile Interaction, and is interested in new developments in this sector.

