AI inference moves closer to the grid as smaller data centers take shape

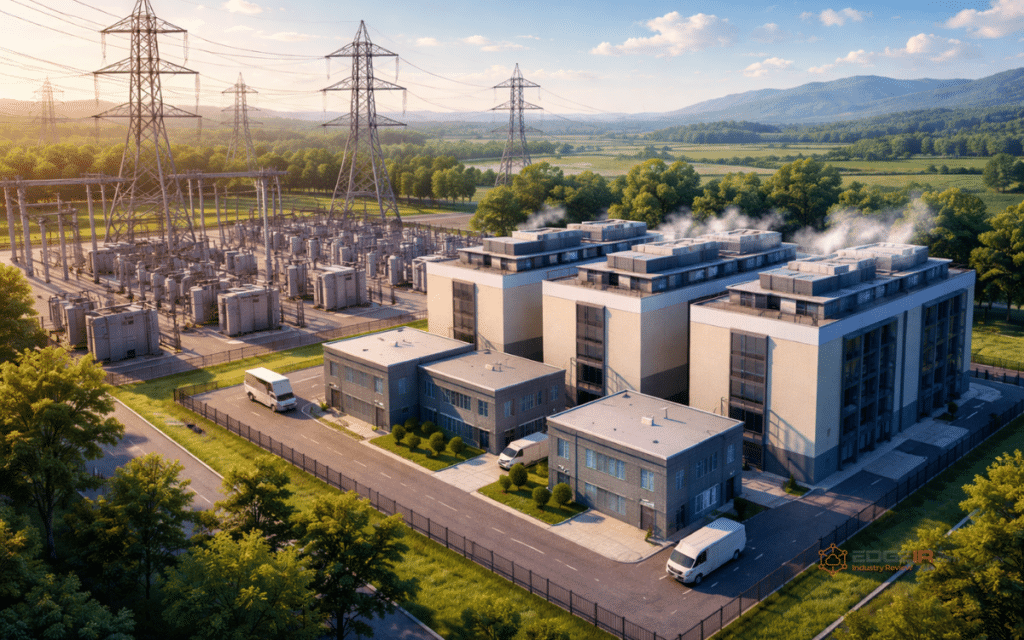

EPRI, NVIDIA, Prologis and InfraPartners have revealed they are working together to create smaller-scale (5-20 MW) distributed data centers for AI inference, sited close to utility substations with available grid capacity.

The partners hope the project will bring real-time AI processing closer to where data originates, alleviating grid congestion while tapping into underutilized grid capacity.

At least five pilot sites are to be set up in the U.S. by the end of 2026, providing a model that can be scaled up.

“AI is driving a new industrial revolution that demands a fundamental rethinking of data center infrastructure,” says Marc Spieler, senior managing director for the Global Energy Industry at NVIDIA. “By deploying accelerated computing resources directly adjacent to available grid capacity, we can unlock stranded power to scale AI inference efficiently. This distributed approach, powered by NVIDIA accelerated computing, maximizes existing energy assets, helping to deliver the intelligence required to transform every industry.”

By placing computing capability at the substation, the project is intended to bolster grid resiliency, increase system flexibility and facilitate renewable energy integration.

EPRI provides research validation and site identification; Prologis contributes expertise in land site evaluation and commercialization; NVIDIA offers GPU-accelerated computing platforms; and InfraPartners deploys the data center infrastructure.

With demand for AI inference surging across industries such as logistics, healthcare and finance, the partnership is designed to meet that demand by offering high-speed, reliable and location-specific compute power.

This decentralized approach is meant to make use of existing energy resources, ease the transmission bottleneck and support the evolution of the energy system. The project points to the need for new infrastructure services that are capable of efficiently meeting growing needs in both energy and AI.
